PBS PASSPORT

Stream tens of thousands of hours of your PBS and local favorites with WETA+ and PBS Passport whenever and wherever you want. Catch up on a single episode or binge-watch full seasons before they air on TV.

Similar Shows

Overview

Science and Nature

The Age of Nature

Science and Nature

Islands of Wonder

Science and Nature

Power Trip: The Story of Energy

Science and Nature

The Molecule That Made Us

Science and Nature

Inside Nature's Giants

Science and Nature

Life on Fire

Science and Nature

The Gene

Science and Nature

Life from Above

Science and Nature

Poster Image

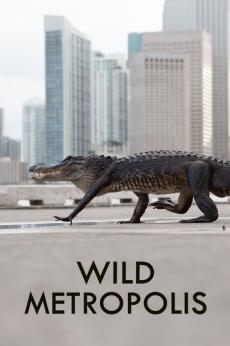

Wild Metropolis

Science and Nature